While dealing with data, we have all dealt with different kinds of joins, be it inner, outer, left or (maybe)left-semi. This article covers the different join strategies employed by Spark to perform the join operation. Knowing spark join internals comes in handy to optimize tricky join operations, in finding root cause of some out of memory errors, and for improved performance of spark jobs(we all want that, don’t we?). Please read on to find out.

Spark Join Strategies:

Broadcast Hash Join

Before beginning the Broadcast Hash join spark, let’s first understand Hash Join, in general:

As the name suggests, Hash Join is performed by first creating a Hash Table based on join_key of smaller relation and then looping over larger relation to match the hashed join_key values. Also, this is only supported for ‘=’ join.

In spark, Hash Join plays a role at per node level and the strategy is used to join partitions available on the node.

Now, coming to Broadcast Hash Join.

In broadcast hash join, copy of one of the join relations are being sent to all the worker nodes and it saves shuffling cost. This is useful when you are joining a large relation with a smaller one. It is also known as map-side join(associating worker nodes with mappers).

Spark deploys this join strategy when the size of one of the join relations is less than the threshold values(default 10 M). The spark property which defines this threshold is spark.sql.autoBroadcastJoinThreshold(configurable).

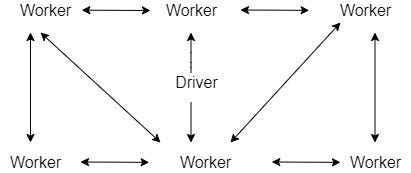

Broadcast relations are shared among executors using the BitTorrent protocol(read more here). It is a peer to peer protocol in which block of files can be shared by peers amongst each other. Hence, they don’t need to rely on a single node. This is how peer to peer protocol works:

Things to Note:

- The broadcasted relation should fit completely into the memory of each executor as well as the driver. In Driver, because driver will start the data transfer.

- Only supported for ‘=’ join.

- Supported for all join types(inner, left, right) except full outer joins.

- When the broadcast size is small, it is usually faster than other join strategies.

- Copy of relation is broadcasted over the network. Therefore, being a network-intensive operation could cause out of memory errors or performance issues when broadcast size is big(for instance, when explicitly specified to use broadcast join/changes in the default threshold).

- You can’t make changes to the broadcasted relation, after broadcast. Even if you do, they won’t be available to the worker nodes(because the copy is already shipped).

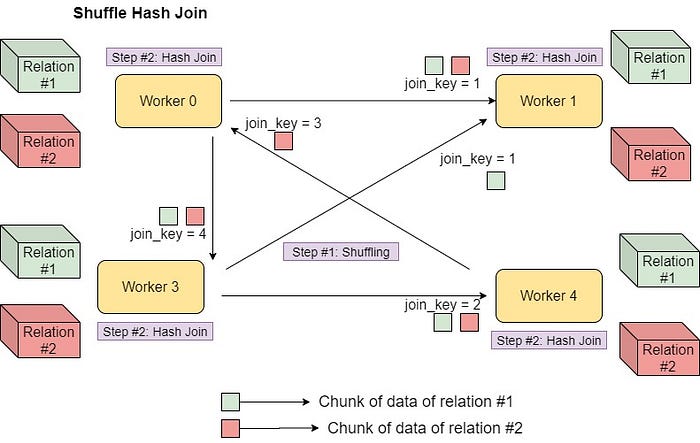

Shuffle hash join

Shuffle Hash Join involves moving data with the same value of join key in the same executor node followed by Hash Join(explained above). Using the join condition as output key, data is shuffled amongst executor nodes and in the last step, data is combined using Hash Join, as we know data of the same key will be present in the same executor.

Things to Note:

- Only supported for ‘=’ join.

- The join keys don’t need to be sortable(this will make sense below).

- Supported for all join types except full outer joins.

- In my opinion, it’s an expensive join in a way that involves both shuffling and hashing(Hash Join as explained above). Maintaining a hash table requires memory and computation.

Shuffle sort-merge join

Let’s first understand Sort-Merge Join

Sort join involves, first sorting the relations based on join keys and then merging both the datasets(think of merge step of merge sort).

Now, let’s understand shuffle sort-merge join strategy in spark:

Shuffle sort-merge join involves, shuffling of data to get the same join_key with the same worker, and then performing sort-merge join operation at the partition level in the worker nodes.

Things to Note:

- Since spark 2.3, this is the default join strategy in spark and can be disabled with

spark.sql.join.preferSortMergeJoin. - Only supported for ‘=’ join.

- The join keys need to be sortable(obviously).

- Supported for all join types.

Cartesian Join

In this strategy, the cartesian product(similar to SQL) of the two relations is calculated to evaluate join.

Broadcast nested loop join

Think of this as a nested loop comparison of both the relations:

for record_1 in relation_1:

for record_2 in relation_2:

# join condition is executedAs you can see, this can be a very slow strategy. This is generally, a fallback option when no other join type can be applied. Spark handles this using BroadcastNestedLoopJoinExec operator that broadcasts the appropriate side of the query, so you can think that at least some chunk of results will be broadcasted to improve performance.

Things to note:

- Supports both ‘=’ and non-equi-joins(‘≤=’, ‘<’ etc.).

- Supports all the join types

How spark selects join strategy?

Taken directly from spark code, let’s see how spark decides on join strategy.

If it is an ‘=’ join:

Look at the join hints, in the following order:

1. Broadcast Hint: Pickbroadcast hash joinif the join type is supported.

2. Sort merge hint: Picksort-merge joinif join keys are sortable.

3. shuffle hash hint: Pickshuffle hash joinif the join type is supported.

4. shuffle replicate NL hint: pickcartesian productif join type is inner like.If there is no hint or the hints are not applicable

1. Pickbroadcast hash joinif one side is small enough to broadcast, and the join type is supported.

2. Pickshuffle hash joinif one side is small enough to build the local hash map, and is much smaller than the other side, andspark.sql.join.preferSortMergeJoinis false.

3. Picksort-merge joinif join keys are sortable.

4. Pickcartesian productif join type is inner .

5. Pickbroadcast nested loop joinas the final solution. It may OOM but there is no other choice.If it’s not ‘=’ join:

Look at the join hints, in the following order:

1. broadcast hint: pickbroadcast nested loop join.

2. shuffle replicate NL hint: pickcartesian productif join type is inner like.If there is no hint or the hints are not applicable

1. Pickbroadcast nested loop joinif one side is small enough to broadcast.

2. Pickcartesian productif join type is inner like.

3. Pickbroadcast nested loop joinas the final solution. It may OOM but we don’t have any other choice.

Comments

Post a Comment